Where does the "deep learning needs big data" rule come from?

Data Science Asked on December 25, 2020

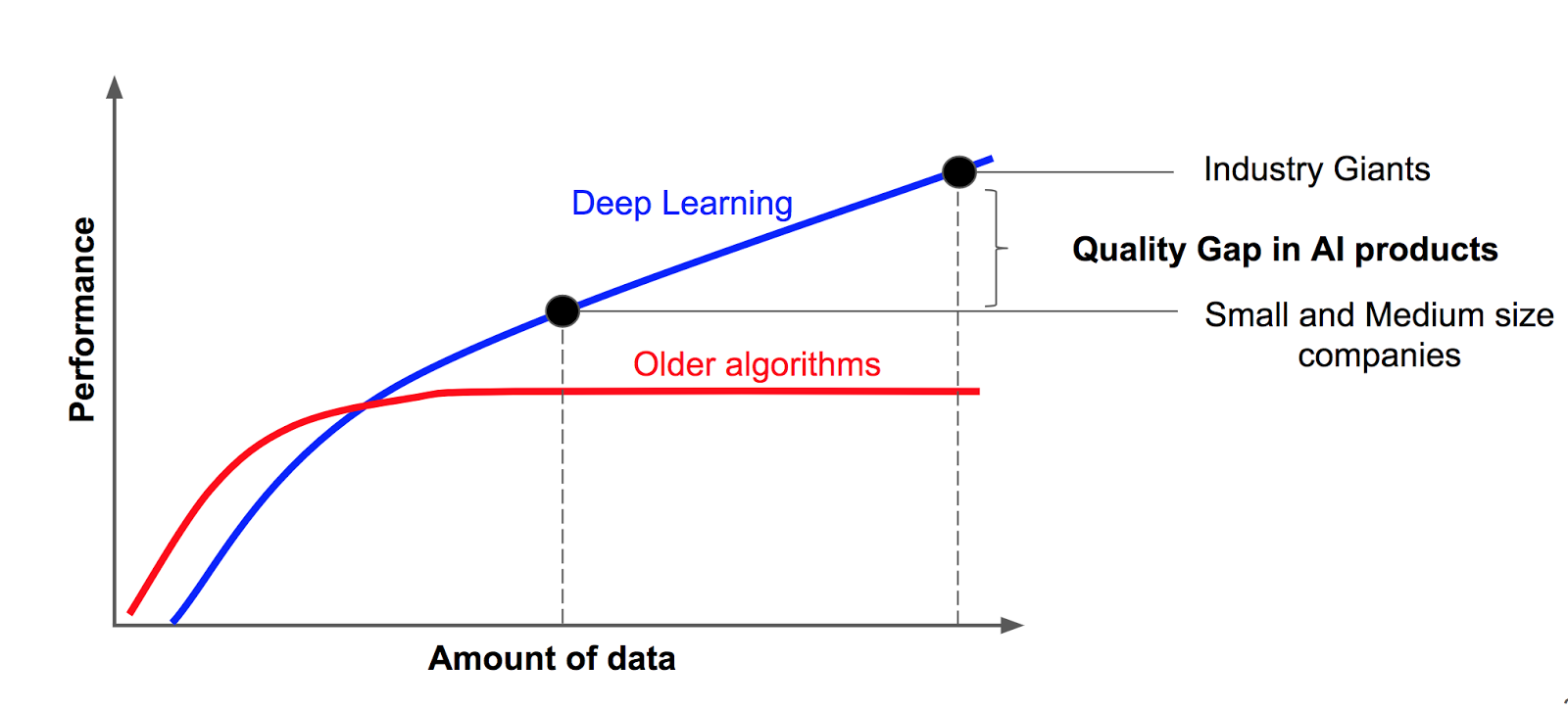

When reading about deep learning I often come across the rule that deep learning is only effective when you have large amounts of data at your disposal. These statements are generally accompanied by a figure such as this:

The example (taken from https://hackernoon.com/%EF%B8%8F-big-challenge-in-deep-learning-training-data-31a88b97b282) is attributed to a ‘famous slide from Andrew Ng’. Does anyone know what this figure is actually based upon? Is there any research that backs up this claim?

2 Answers

The original slide in question, "Scale drives deep learning progress", is possibly the one you can currently find at https://cs230.stanford.edu/files/C1M1.pdf (page 13). It may be roughly interpreted as "low bias learners [in that plot, larger neural networks] tend to benefit from more training examples".

Answered by Davide Fiocco on December 25, 2020

The main reason is that deep learning models have a very large number of trainable parameters, and there is a rule of thumb that you need at least $5$ to $10$ training examples per parameter to get good predictions.

The reason is a bit complicated to explain, but it is related to PAC learning. If you insist on knowing why: the bound on the test error contains an overfitting term, and how that term behaves depends on how fast the hypothesis class grows with the sample size $n$. For a hypothesis class with growth $O(2^n)$, such as 1-NN, it is impossible to bring the generalisation error down to the training error. By contrast, for a hypothesis class with growth $O(n^c)$, i.e. bounded by a polynomial, the overfitting term can be diminished by increasing the size of the training set. Consequently, if you increase the size of your data, you can get a better generalisation error. Deep learning models follow the second kind of growth: the more data you have, the better the generalisation.
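To make the contrast between the two growth rates concrete, here is a minimal sketch. It assumes the textbook VC generalisation bound, $\sqrt{\tfrac{8}{n}\ln\tfrac{4\,m_H(2n)}{\delta}}$, as the "overfit term"; the answer itself does not name a specific bound, so this choice is an assumption. The function names, the exponent `d=10` and the confidence level `delta=0.05` are hypothetical values picked for illustration only.

```python
import math


def vc_gap(n, log_growth, delta=0.05):
    """Bound on (test error - training error) for n samples.

    Assumed form: sqrt((8 / n) * ln(4 * m_H(2n) / delta)),
    where log_growth(k) returns ln m_H(k), the log of the growth function.
    """
    return math.sqrt(8.0 / n * (math.log(4.0 / delta) + log_growth(2 * n)))


def log_exponential(k):
    # Growth function m_H(k) = 2^k: the class can realise every labelling.
    return k * math.log(2.0)


def log_polynomial(k, d=10):
    # Growth function bounded by k^d + 1 (d is a hypothetical exponent).
    return math.log(k ** d + 1)


if __name__ == "__main__":
    for n in (100, 10_000, 1_000_000):
        exp_gap = vc_gap(n, log_exponential)
        poly_gap = vc_gap(n, log_polynomial)
        print(f"n={n:>9}  exponential: {exp_gap:.3f}  polynomial: {poly_gap:.3f}")
```

Under these assumptions the polynomial-growth term shrinks towards zero as `n` increases, while the exponential-growth term levels off at a constant well above 1 and therefore never becomes an informative guarantee, which is the distinction the answer draws.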

Answered by Media on December 25, 2020
