How to deal with ternary Output neurons in the Output classification layer of a simple feedforward Neural Net?

Data Science Asked by Bünyamin Özkaya on April 30, 2021

I was looking into the multi-label classification on the output layer of a Neural Network.

I have 5 Output Neurons where each Neuron can be 1, 0, or -1. independent of other Neurons.

So for example an Output would look like :

| Output |

|---|

| 1 |

| 0 |

| -1 |

| 0 |

| 1 |

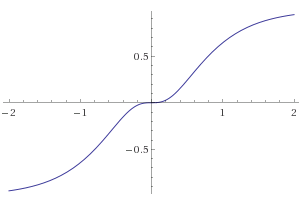

I used to take the tanh- activation function and partition the neuron into 3 ( y<-0.5, -0.5<y<0.5, y>0.5) to decide the class in each of those neurons after the prediction. See Figure below.

Question:

Are there any better alternatives on how to approach this ternary Output activation?

I stumbled upon this blog post regarding n-ary activation functions which I found very interesting.

I think the newly suggested would make the partitioning mentioned above much more meaningful!

Or do you think I should one-hot-encode my whole system such that a neuron can only have a value of 0 or 1 and then just use sigmoids activation with a threshold of 0.5?

One Answer

The typical approach for this kind of scenario is to handle the output as a discrete element and have each output be a probability distribution over the discrete output space, that is, for each output you generate 3 numbers between 0 and 1 where the sum of them adds up to 1. You normally obtain this by generating for each position a vector of 3 elements in $mathbb{R}$ instead of a single element and then passing it through a softmax function.

The typical loss function for that is the categorical cross-entropy, which receives the probability vector (although depending on the implementation and the parameters, it may receive the vector before the softmax, which are called the "logits"), and the index of the expected discrete output.

This is the usual approach for multi-class classification that you will find in most tutorials, like this one.

Correct answer by noe on April 30, 2021

Add your own answers!

Ask a Question

Get help from others!

Recent Questions

- How can I transform graph image into a tikzpicture LaTeX code?

- How Do I Get The Ifruit App Off Of Gta 5 / Grand Theft Auto 5

- Iv’e designed a space elevator using a series of lasers. do you know anybody i could submit the designs too that could manufacture the concept and put it to use

- Need help finding a book. Female OP protagonist, magic

- Why is the WWF pending games (“Your turn”) area replaced w/ a column of “Bonus & Reward”gift boxes?

Recent Answers

- haakon.io on Why fry rice before boiling?

- Peter Machado on Why fry rice before boiling?

- Joshua Engel on Why fry rice before boiling?

- Lex on Does Google Analytics track 404 page responses as valid page views?

- Jon Church on Why fry rice before boiling?